An interactive public installation with smartphones, Fête des Lumières, Lyon, December 2014

Overexposure is an interactive work bringing together a public installation and a smartphone application. On an urban square, a large black monolith projects an intense beam of white light into the sky. Visible all over the city, the beam turns off and on, pulsating in a way that conveys rigor and a will to communicate, even if we don’t immediately understand the signals it is producing. On one side of the monolith, white dots and dashes scroll past from the bottom up, marking the installation with their rhythm: each time one reaches the top of the monolith, the light goes off, as if the marks were emptying into the light. On a completely different scale, we see the same marks scrolling across the smartphone screens of the people in attendance, who interact with the work following the same rhythm. Here, it is the smartphones’ flash that releases light in accordance with the coded language. These are in fact messages being sent in Morse code, from everyone, to everyone and to the sky, and we can read them thanks to the surtitles that accompany the marks. Using a smartphone, anyone can send a message, saying what they think and thereby presenting themselves for a few moments to everyone, to a community sharing the same time and the same rhythm. And we can take the pulse of an even larger community, on the scale of the city and in real time, through a map of mobile phone network use that can be viewed on one side of the monolith or on a smartphone.

From a hand-sized individual device (the smartphone) to a shared format on the scale of the city, a momentary community forms and transforms, sharing a space, a pace, and the same data. It follows a type of communication whose ability to bring people together through a sensory experience matters more than the meaning of the messages it transmits or their destination, which is lost in the sky.

(Photos: Samuel Bianchini)

Credits

An Orange/EnsadLab project

A project under the direction of Samuel Bianchini (EnsadLab), in collaboration with Dominique Cunin (EnsadLab), Catherine Ramus (Orange Labs/Sense), and Marc Brice (Orange Labs/Openserv), as part of a research partnership with Orange Labs

“Orange/EnsadLab” partnership directors: Armelle Pasco, Director of Cultural and Institutional Partnerships, Orange; and Emmanuel Mahé, Head of Research, EnsAD

- Project Manager (Orange): Abla Benmiloud-Faucher

- IT Development (EnsadLab): Dominique Cunin, Oussama Mubarak, Jonathan Tanant, and Sylvie Tissot

- Mobile network data supply: Orange Fluxvision

- Mobile network data processing: Cezary Ziemlicki and Zbigniew Smoreda (Orange)

- SMS Server Development: Orange Applications for Business

- Graphic Design: Alexandre Dechosal (EnsadLab)

- In situ installation (artistic and engineering collaboration): Alexandre Saunier (EnsadLab)

- Lighting and construction of the installation structure: Sky Light

- Wireless network deployment coordination: Christophe Such (Orange)

- Communication: Nadine Castellani, Karine Duckit, Claudia Mangel (Orange), Nathalie Battais-Foucher (EnsAD)

- Mediation: Nadjah Djadli (Orange)

- Project previsualization: Christophe Pornay

- Assistant: Élodie Tincq

- Message moderators: Élodie Tincq, Marion Flament, Charlotte Gautier

- Production: Orange

- Executive Production: EnsadLab

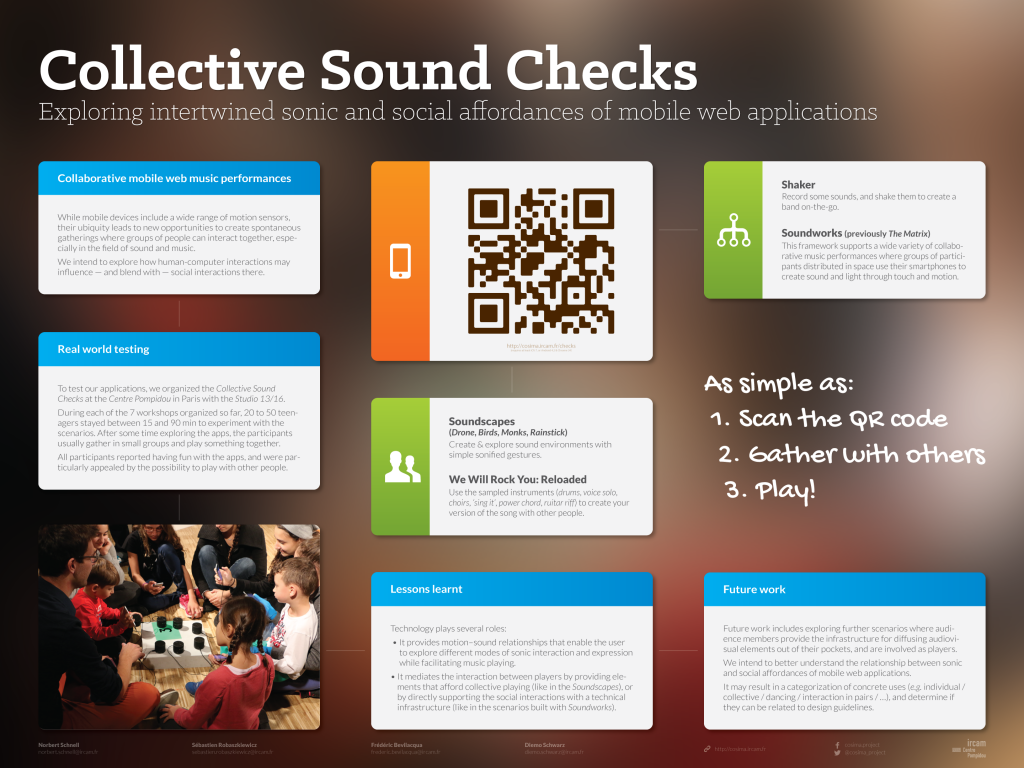

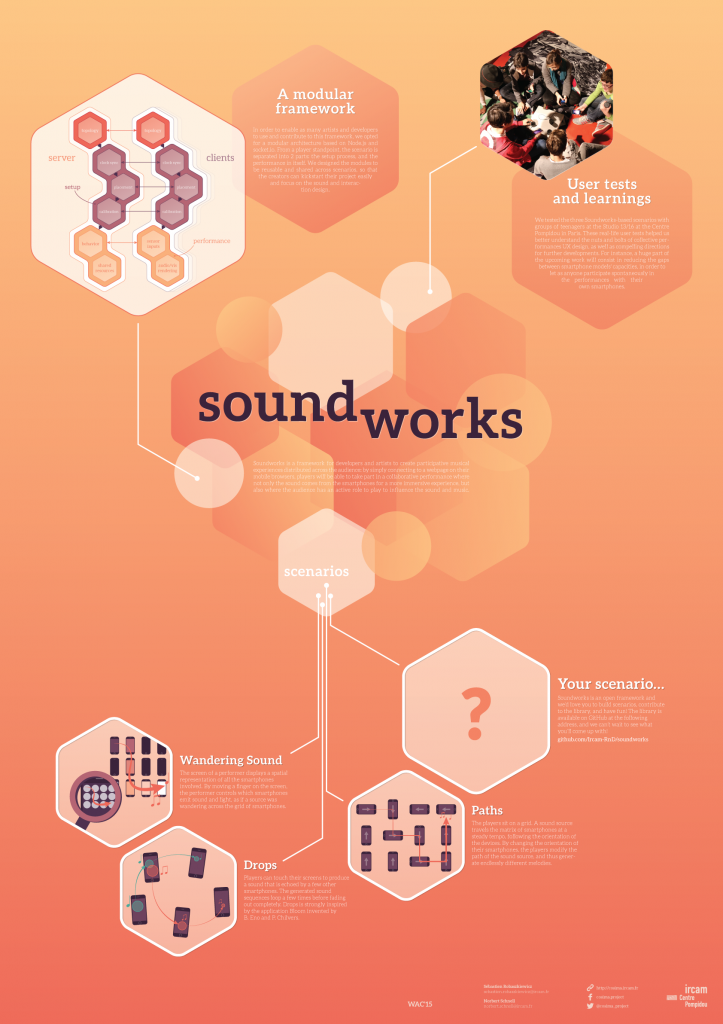

Research and development for this work was carried out in connection with the research project Cosima (“Collaborative Situated Media”), with the support of the French National Research Agency (ANR), and contributes to the development of Mobilizing.js, a programming environment for mobile screens developed by EnsadLab for artists and designers.