The Innovation Radar Prize will select the best innovators supported by the European H2020 program for the year 2018.

The Orbe team has been selected thanks to nodal.studio, our web platform for digital creatives.

You can support us by voting here: https://ec.europa.eu/futurium/en/industrial-enabling-tech-2018/orbe

Orbe developed nodal.studio as part of the research projects CoSiMa (ANR) and rapidmix (H2020), in collaboration with the ISMM team at IRCAM-STMN.

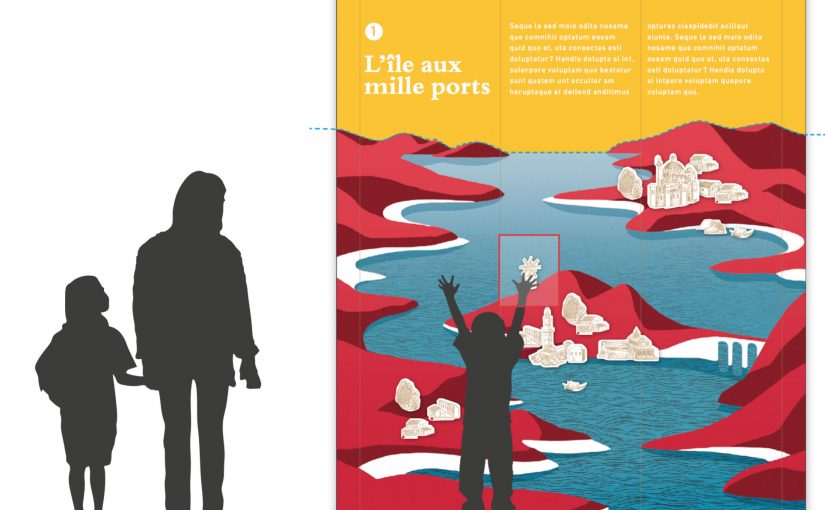

With nodal.studio, you can bring your ideas to life and build your project with an accessible authoring tool. There is no need to install an application or plugin: just open nodal.studio in your web browser. To learn more about nodal.studio, visit https://nodal.studio/