During IRCAM’s open house on June 6th, CoSiMa presented two different projects, Collective Loops and Woodland.

Collective Loops

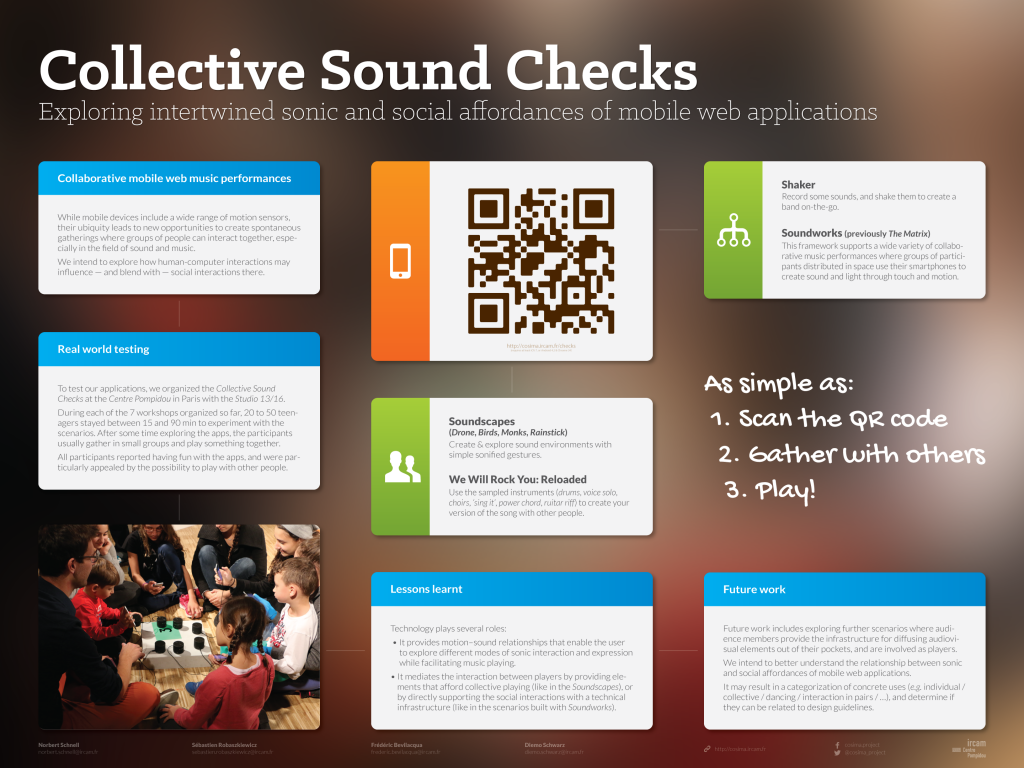

Collective Loops is a collaborative version of an 8-step loop sequencer. When visitors access the installation's webpage with their smartphones, they are automatically assigned to an available step in the sequence loop, and each smartphone plays a sound when its turn comes. Participants control the pitch of the sound by tilting their smartphones. Together, they are invited to create a melody of 8 pitches that circulates at a steady tempo across their smartphones.

A circular visualization of the sequencer is projected on the floor. The projection consists of a circle divided into 8 sections that light up in a counterclockwise circular motion, synchronized with the sounds emitted by the smartphones. Each section of the projection is further divided into 12 radial segments that display the pitch of the corresponding sequence step (i.e. the pitch controlled through the inclination of the participant's smartphone).
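As a rough illustration of the mapping described above, here is a minimal Python sketch that turns a tilt angle into one of the 12 pitch segments and computes when a given step sounds. It is not the installation's actual code: the function names, the tilt range of -90 to 90 degrees, the linear mapping, and the tempo of one step per beat at 120 BPM are all assumptions made for the example.

```python
def tilt_to_pitch_index(tilt_degrees: float, segments: int = 12,
                        min_tilt: float = -90.0, max_tilt: float = 90.0) -> int:
    """Map a tilt angle to a pitch segment index in 0..segments-1.

    The tilt range and the linear mapping are assumptions for illustration.
    """
    # Clamp so that out-of-range sensor readings still map to a valid segment.
    clamped = max(min_tilt, min(max_tilt, tilt_degrees))
    normalized = (clamped - min_tilt) / (max_tilt - min_tilt)  # 0.0 .. 1.0
    # normalized == 1.0 would give `segments`, so cap at the last index.
    return min(segments - 1, int(normalized * segments))


def step_onset(step: int, loop_index: int,
               tempo_bpm: float = 120.0, steps: int = 8) -> float:
    """Time in seconds at which a step sounds, assuming one step per beat."""
    step_duration = 60.0 / tempo_bpm
    return (loop_index * steps + step) * step_duration
```

With these assumptions, a phone held flat (0 degrees) lands in the middle of the pitch range, and each phone only needs its step number and a shared clock to know when to play.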

The first 8 participants who connect to the sequencer get a celesta sound, the next 8 play a drum kit, and the last 8 get a bass sound. Altogether, 24 players can create complex rhythmic and melodic patterns.
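The assignment of participants to instruments and steps can be sketched as a small slot allocator. This is only one possible reading of the behaviour described here, not the installation's implementation: the class name `SlotAllocator`, its methods, and the rule that a freed slot is handed to the next newcomer are assumptions.

```python
from typing import Optional, Tuple

INSTRUMENTS = ("celesta", "drum kit", "bass")  # order in which sounds are handed out
STEPS_PER_LOOP = 8


class SlotAllocator:
    """Hands out (instrument, step) slots in connection order (hypothetical helper)."""

    def __init__(self) -> None:
        self.occupied = set()

    def connect(self) -> Optional[Tuple[str, int]]:
        """Return the first free (instrument, step) slot, or None if all 24 are taken."""
        for slot in range(len(INSTRUMENTS) * STEPS_PER_LOOP):
            if slot not in self.occupied:
                self.occupied.add(slot)
                return INSTRUMENTS[slot // STEPS_PER_LOOP], slot % STEPS_PER_LOOP
        return None

    def disconnect(self, instrument: str, step: int) -> None:
        """Free a slot so that a later participant can take it (assumed behaviour)."""
        slot = INSTRUMENTS.index(instrument) * STEPS_PER_LOOP + step
        self.occupied.discard(slot)
```

For example, the ninth call to `connect()` on a fresh allocator returns the first drum kit step, and a 25th participant gets `None` until someone leaves.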

Woodland

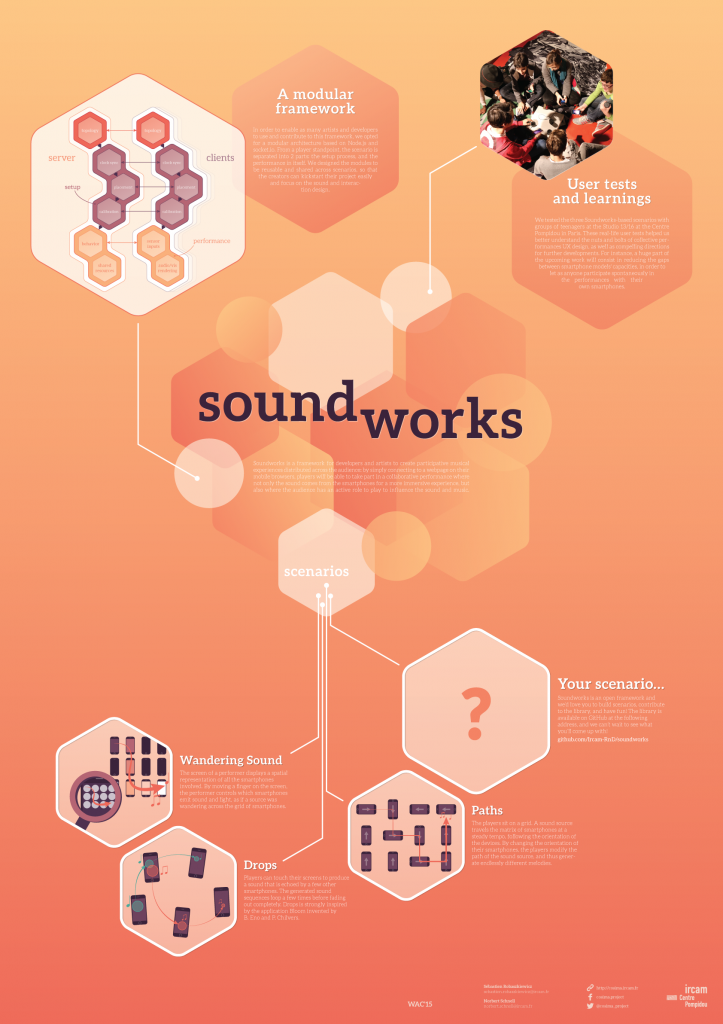

Woodland is a very early-stage prototype that aims to explain how audio effects such as reverb arise in natural environments. For this, we create a setting where each participant is a tree in a forest. At some point, a designated player “throws a sound” into the forest by swinging their smartphone upwards. After a few seconds of calculations, the sound falls on one tree; then we hear the first wave of resonances when the sound reaches the other trees; and so on recursively until the sound ultimately vanishes.

To help people understand what is going on, we can control several parameters of the simulation, such as the speed of sound in the air, the sound absorption of the air, and the type of sound (with a hard or soft attack). That way, if we set the parameters to match a natural setting, we hear the same reverb as we would hear in a forest. But if, for example, we slow down the speed of sound, we can hear a very slow version of how this natural reverb is built, hearing each echo one by one.
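To make the role of these parameters concrete, here is a minimal Python sketch of the first wave of echoes. It is not the prototype's actual model: the function name `first_wave`, the 2D tree positions in meters, and the exponential attenuation with distance are assumptions, and other effects such as geometric spreading are ignored.

```python
import math
from typing import List, Sequence, Tuple


def first_wave(source: Sequence[float],
               trees: Sequence[Sequence[float]],
               speed_of_sound: float = 343.0,        # meters per second
               absorption_per_meter: float = 0.01    # assumed attenuation rate
               ) -> List[Tuple[float, float]]:
    """Return one (delay_seconds, gain) pair per tree for a sound leaving `source`."""
    echoes = []
    for tree in trees:
        distance = math.dist(source, tree)
        # A slower speed of sound stretches the delays and spreads the echoes apart.
        delay = distance / speed_of_sound
        # Assumed model: the air removes a fixed fraction of the sound per meter.
        gain = math.exp(-absorption_per_meter * distance)
        echoes.append((delay, gain))
    return echoes
```

Dividing `speed_of_sound` by 10 multiplies every delay by 10 while leaving the gains unchanged, which is the "slow motion" reverb described above. Applying the same function again from each tree that was reached would give the next wave of echoes.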

This first prototype was very promising, and further developments might include a visualization on the floor of the different sounds that bounce from tree to tree to create that reverb effect.