On February 6th, 7th and 8th, La Ville de Paris and the À Suivre association organized the 4th edition of Paris Face Cachée, which aims to offer original and offbeat ways to discover the city. The CoSiMa team led the Expérimentations sonores workshops, held at IRCAM on February 7th.

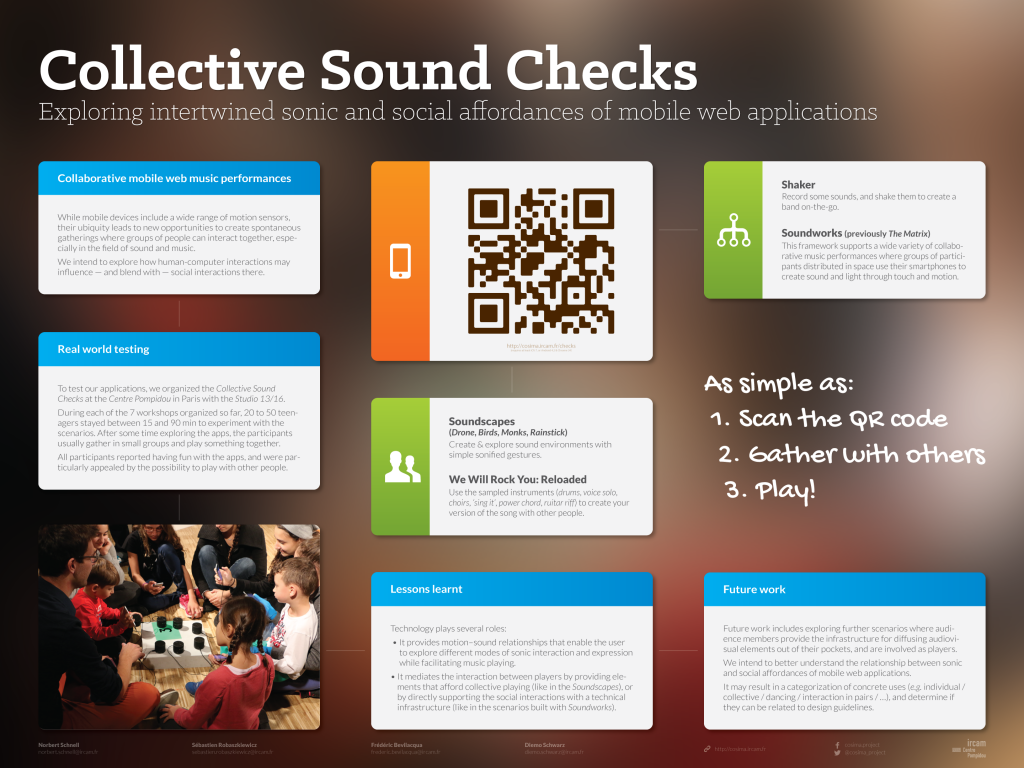

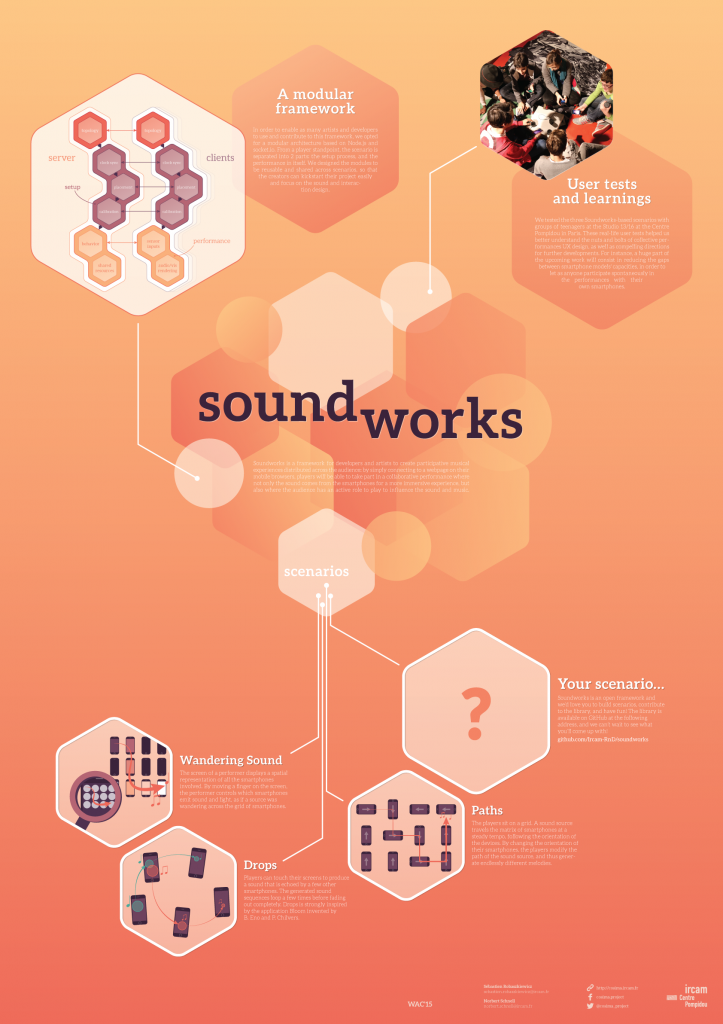

Three groups of 24 participants tested the latest web applications we developed. The audience first tried a few soundscapes (Birds and Monks) to get familiar with the sound-motion interactions on their smartphones, and to learn how to listen to each other while individually contributing to a collective sonic environment.

In the second part of the workshop, we invited the participants to take part in the Drops collective smartphone performance. While the soundscapes also work as standalone web applications (i.e. they do not technically require other people to play with), Drops is inherently designed for a group of players, and its technology directly supports the social interaction. Each player can play a limited number of sound drops, whose pitch depends on the touch position. The sound drops are automatically echoed by the smartphones of other players before coming back to the original player, creating a loop of long echoes that fades until it vanishes. The collective performance is accompanied by a synchronized soundscape on ambient loudspeakers.
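For readers curious about how such an echo loop could be scheduled, here is a minimal sketch. It is not the actual Drops code: the function names, the round-robin order of players, the pitch mapping (220 Hz base, two octaves) and the timing and attenuation values are all assumptions chosen for illustration.

```typescript
// Hypothetical sketch: none of these names or values come from the Drops source.
interface Echo {
  playerIndex: number;  // which player's phone plays this repetition
  delaySeconds: number; // time elapsed since the original touch
  gain: number;         // linear amplitude, 1 = level of the original drop
  frequencyHz: number;  // pitch of the drop
}

// Map a normalized touch position (0 to 1) to a pitch.
// Assumed mapping: exponential, spanning `octaves` above `baseHz`.
function pitchFromTouch(y: number, baseHz = 220, octaves = 2): number {
  const clamped = Math.min(1, Math.max(0, y));
  return baseHz * Math.pow(2, clamped * octaves);
}

// Build the list of repetitions for one drop. The drop is passed from phone
// to phone (assumed round-robin), losing level at each hop, and the loop
// stops once the level falls below `minGain`.
function scheduleEchoes(
  originIndex: number,
  playerCount: number,
  touchY: number,
  hopSeconds = 2.5,
  attenuation = 0.7,
  minGain = 0.05,
): Echo[] {
  if (playerCount < 1) throw new Error("playerCount must be at least 1");
  if (!(attenuation > 0 && attenuation < 1)) {
    throw new Error("attenuation must be strictly between 0 and 1");
  }
  if (!(minGain > 0)) throw new Error("minGain must be positive");

  const frequencyHz = pitchFromTouch(touchY);
  const echoes: Echo[] = [];
  let gain = 1;
  for (let hop = 0; gain >= minGain; hop++) {
    echoes.push({
      playerIndex: (originIndex + hop) % playerCount,
      delaySeconds: hop * hopSeconds,
      gain,
      frequencyHz,
    });
    gain *= attenuation; // each hop is quieter than the previous one
  }
  return echoes;
}
```

With three players, a drop started by player 0 would be replayed by players 1 and 2 and then return to player 0, each time at a lower level. In a real performance the phones would also need a shared clock so that the delays line up across devices, which this sketch leaves out.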

The performance is strongly inspired by the mobile application Bloom by Brian Eno and Peter Chilvers.

Below are a few pictures from the event.